How modern DevOps is done. Part 2: wiring up CI/CD!

In the previous article I covered building a Docker image and pushing it to the registry. In real life nobody does this manually; we let continuous delivery do the work for us. So let's create a pipeline or two that automate the process.

I will be using GitHub Actions, which will do just fine for the demo project. For a real-life app I would encourage taking a look at DroneCI: it can be self-hosted and integrated with K8s, which can be a crucial factor for big companies that want to save costs and keep their secrets in-house.

The pipelines are typically wired up on specific events that GitHub triggers. In order to understand what events to use, I need to choose the branching model first. There are two main options:

I'll go with the second option. The master (main or trunk) branch will be referred to as the production branch.

So, three action pipelines must be created:

- Lint and test the app on every push to a feature branch

- Build a staging image every time a feature branch is merged to the production branch

- Build a production image every time a new release is created

For now, I will only build images, not deploy them, since at this point there isn't even a cluster to deploy to. I'll improve the pipelines later down the road.

Some prefer deploying to production on merge instead, but I personally find this unsafe: once a mis-triggered deployment has started, there is little opportunity to stop it.

On every push to a feature branch, the linter and unit tests must be executed to keep code quality high. Here is a pipeline for this. Note that it is wired to the pull_request event, so it runs for pushes to branches that have an open pull request:

```yaml
name: Lint and test

on:
  pull_request:

jobs:
  lint-test:
    name: Run linter, unit tests
    runs-on: ubuntu-latest
    defaults:
      run:
        working-directory: ./devops/app
    steps:
      - uses: actions/checkout@v2.3.1
        with:
          fetch-depth: 0
      - name: Detect changes
        uses: dorny/paths-filter@v2
        id: filter
        with:
          filters: |
            app:
              - 'cmd/**'
              - 'go.mod'
      - uses: actions/setup-go@v4
        with:
          go-version: '1.21.x'
          cache: false
      - name: Run lint
        uses: golangci/golangci-lint-action@v3.4.0
        with:
          version: v1.55.1
          args: -v --timeout=10m0s --config ./.golangci-lint.yml
          skip-cache: true
          working-directory: ./devops/app
      - name: Run unit tests
        run: |
          go test -short -mod=mod -v -p=1 -count=1 ./...
```

To avoid duplication across pipelines, it's a good idea to write a bash script that builds and pushes images. Let's take a look at this one.

```bash
#!/usr/bin/env bash

REGION=asia-east1-docker.pkg.dev
PROJECT=go-app-390716
APP=devops-app

while getopts a:t:e: flag
do
  case "${flag}" in
    a) ACTION=${OPTARG};;
    t) TAG=${OPTARG};;
    e) ENV=${OPTARG};;
    *) exit 1
  esac
done

IMAGE="${REGION}"/"${PROJECT}"/devops-"${ENV}"/"${APP}":"${TAG}"

if [ "${ACTION}" = "build" ]
then
  docker build -t "${IMAGE}" .
fi

if [ "${ACTION}" = "push" ]
then
  gcloud config set project "${PROJECT}"
  gcloud auth configure-docker "${REGION}"
  docker push "${IMAGE}"
fi
```

The script takes three arguments: the action ("build" or "push"), the image version (used as the tag), and the environment. From these, together with the registry host (the REGION variable), the project name, and the application name, it assembles the fully qualified image name.
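To make the naming scheme concrete, here is a minimal sketch that only composes the image name, without calling Docker or gcloud. The `image_ref` helper and the sample tags are my own illustration, not part of the script; the registry host, project, and app name are the values used above.

```shell
#!/usr/bin/env bash
# Sketch: compose the image reference the same way the build script does.
REGION=asia-east1-docker.pkg.dev
PROJECT=go-app-390716
APP=devops-app

# image_ref ENV TAG -> prints the full image reference (hypothetical helper).
image_ref() {
  printf '%s/%s/devops-%s/%s:%s\n' "$REGION" "$PROJECT" "$1" "$APP" "$2"
}

# Sample values; a1b2c3d and v1.2.3 are made-up tags.
image_ref stg a1b2c3d
image_ref live v1.2.3
```

The real script would be invoked in the same spirit, for example `./scripts/build-push-image.sh -a build -t a1b2c3d -e stg`.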

It's good practice to have separate projects for staging and live environments, both for safety and to be able to define access policies in a more granular fashion. However, for the demo project it's fine to keep images in sub-folders instead.

I also add the script to the Makefile as two targets:

```makefile
build_image:
	@./scripts/build-push-image.sh -a build -t $(tag) -e $(env)

push_image:
	@./scripts/build-push-image.sh -a push -t $(tag) -e $(env)
```

To be able to push images to the registry, we need authentication. In the case of GCP, one way to achieve this is to use service accounts. A service account is a non-human account meant for applications and automation rather than people. Let's see how one can be created.

First, we go to the "service accounts" section of IAM.

We click "Create Service Account" and fill the form out:

In practice, you may want a dedicated role for CI/CD-related service accounts to give more granular access, but for the purpose of this article the Owner role will do just fine.
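For those who prefer the command line, the same account could be created with gcloud and given a narrower role than Owner. This is only a sketch under assumptions: the account name `ci-image-pusher` is made up, and I picked `roles/artifactregistry.writer` as an example of a role that is limited to pushing images. By default the script just prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch: create a CI service account with a narrower role than Owner.
# SA_NAME is a hypothetical name; PROJECT is the project ID used in this article.
PROJECT=go-app-390716
SA_NAME=ci-image-pusher
SA_EMAIL="${SA_NAME}@${PROJECT}.iam.gserviceaccount.com"

# With DRY_RUN=1 (the default here) commands are printed, not executed.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

create_ci_service_account() {
  run gcloud iam service-accounts create "$SA_NAME" \
    --project "$PROJECT" \
    --display-name "$SA_NAME"
  # Grant only the permission to write to Artifact Registry.
  run gcloud projects add-iam-policy-binding "$PROJECT" \
    --member "serviceAccount:${SA_EMAIL}" \
    --role roles/artifactregistry.writer
}

create_ci_service_account
```

Setting `DRY_RUN=0` before running the script would execute the commands for real, which requires an authenticated gcloud with enough permissions on the project.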

Then we open the newly created service account and add a new JSON access key, which is downloaded automatically. After that we take the contents of the JSON file and base64-encode it:

```bash
base64 ~/Downloads/go-app-390716-d7f325da7e19.json > ~/sa_key.txt
```

Then we add a new secret to the GitHub repository with the name GCP_SERVICE_ACCOUNT and the contents of the encoded file as its value.
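Before pasting the value into the secret, it can be worth checking that the encoded text decodes back to the original file, since that decode is exactly what a pipeline has to do later. Below is a small sketch of such a round trip. The helper names are hypothetical, the file is a throwaway stand-in rather than a real key, and I assume a `base64` that understands `-d` (as GNU coreutils does).

```shell
#!/usr/bin/env bash
# Sketch: encode a file to a single base64 line and decode it back.

# encode_key FILE -> base64 on one line (tr removes the line wrapping GNU base64 adds).
encode_key() {
  base64 < "$1" | tr -d '\n'
}

# decode_key -> reads base64 on stdin, writes the original bytes to stdout.
decode_key() {
  base64 -d
}

# Round-trip check with a throwaway file (not a real key).
tmp=$(mktemp)
printf '{"type": "service_account"}\n' > "$tmp"
if encode_key "$tmp" | decode_key | cmp -s - "$tmp"; then
  echo "round trip ok"
else
  echo "round trip failed"
fi
rm -f "$tmp"
```

Stripping the newlines is a design choice: a single-line value is easier to paste into a form field, and it decodes to the same bytes.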

Another pipeline is executed each time a feature branch is merged to master. At this point it's a little too late to run the linter, but it is still worth running unit tests. At the end a Docker image is built and pushed to the registry.

Now we need to decide what kind of image tag to use. Some engineers use the latest tag for staging deployments, but I've seen problems with this approach. A better way is to use the pull request commit hash as the image tag. The full hash is too long, so we shorten it to 7 characters. This is the same as what GitHub does, so it becomes easy to find out which image was built from which PR.

```yaml
name: Test, build and deploy to Staging environment

on:
  push:
    branches: [master]

jobs:
  test-build-push:
    name: Run unit tests, build and push a new image to Staging
    runs-on: ubuntu-latest
    defaults:
      run:
        working-directory: ./devops/app
    steps:
      - uses: actions/checkout@v2.3.1
        with:
          fetch-depth: 0
      - name: Detect changes
        uses: dorny/paths-filter@v2
        id: filter
        with:
          filters: |
            app:
              - 'cmd/**'
              - 'go.mod'
      - uses: actions/setup-go@v4
        with:
          go-version: '1.21.x'
          cache: false
      - name: Run tests
        run: |
          go test -short -mod=mod -v -p=1 -count=1 ./...
      - name: Get image tag
        id: commit_hash
        run: |
          SHORT_COMMIT_HASH=$(git rev-parse --short=7 "$GITHUB_SHA")
          echo "IMAGE_TAG=$SHORT_COMMIT_HASH" >> $GITHUB_ENV
          echo "Commit SHA: $GITHUB_SHA"
          echo "Short commit hash: $SHORT_COMMIT_HASH"
      - name: GCP Auth
        run: |
          echo "${{secrets.GCP_SERVICE_ACCOUNT}}" | base64 -d > ./google_sa.json
          gcloud auth activate-service-account --key-file=./google_sa.json
      - name: Build new image
        run: |
          make build_image tag=$IMAGE_TAG env=stg
      - name: Push the image
        run: |
          make push_image tag=$IMAGE_TAG env=stg
```
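The tag derivation from the "Get image tag" step can be tried locally without a repository. The sketch below is a simplified stand-in, not what the pipeline runs: it truncates the SHA with `cut` instead of asking git, and the `short_sha` helper and the fallback SHA are made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: derive a 7-character image tag from a full commit SHA.

# short_sha SHA -> first 7 characters of the SHA (hypothetical helper).
short_sha() {
  printf '%s\n' "$1" | cut -c1-7
}

# Outside of GitHub Actions GITHUB_SHA is unset, so fall back to a made-up SHA.
GITHUB_SHA="${GITHUB_SHA:-3f2a9c1d8e7b6a5f4c3d2e1f0a9b8c7d6e5f4a3b}"
IMAGE_TAG=$(short_sha "$GITHUB_SHA")
echo "IMAGE_TAG=$IMAGE_TAG"
```

One difference is worth knowing: `git rev-parse --short=7` treats 7 as a minimum and may print more characters when the short form would be ambiguous in the repository, while plain truncation always yields exactly 7.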

This pipeline only builds and pushes the image; the deployment will happen later using Spinnaker.

If by chance you have a canary environment, you can rig up automatic deployments to canary each time there is a merge to master. Personally I prefer keeping this part of the process manual: an automated process carries a risk of accidental deployments that, once triggered, are difficult to abort in good time.

I strongly believe that live deployments should be as conscious and deliberate as possible.

So, the live image is built only when a release is published:

```yaml
name: Build for Live environment

on:
  release:
    types: [published]

jobs:
  validate_tags:
    runs-on: ubuntu-latest
    env:
      GITHUB_RELEASE_TAG: ${{ github.ref }}
    outputs:
      hasValidTag: ${{ steps.check-provided-tag.outputs.isValid }}
    steps:
      - name: Check Provided Tag
        id: check-provided-tag
        run: |
          if [[ ${{ github.ref }} =~ refs\/tags\/v[0-9]+\.[0-9]+\.[0-9]+ ]]; then
            echo "isValid=true" >> "$GITHUB_OUTPUT"
          else
            echo "isValid=false" >> "$GITHUB_OUTPUT"
          fi

  build-push:
    name: Build and push the image to Live
    needs: [validate_tags]
    if: needs.validate_tags.outputs.hasValidTag == 'true'
    runs-on: ubuntu-latest
    defaults:
      run:
        working-directory: ./devops/app
    env:
      GITHUB_RELEASE_TAG: ${{ github.ref }}
    steps:
      - uses: actions/checkout@v2
      - name: Configure gcloud as docker auth helper
        run: |
          gcloud auth configure-docker
      - name: GCP Auth
        run: |
          echo "${{secrets.GCP_SERVICE_ACCOUNT}}" | base64 -d > ./google_sa.json
          gcloud auth activate-service-account --key-file=./google_sa.json
      - name: Extract version
        uses: mad9000/actions-find-and-replace-string@3
        id: extract_version
        with:
          source: ${{ github.ref }}
          find: "refs/tags/"
          replace: ""
      - name: Build new image
        run: |
          make build_image tag=${{ steps.extract_version.outputs.value }} env=live
      - name: Push the image
        run: |
          make push_image tag=${{ steps.extract_version.outputs.value }} env=live
```

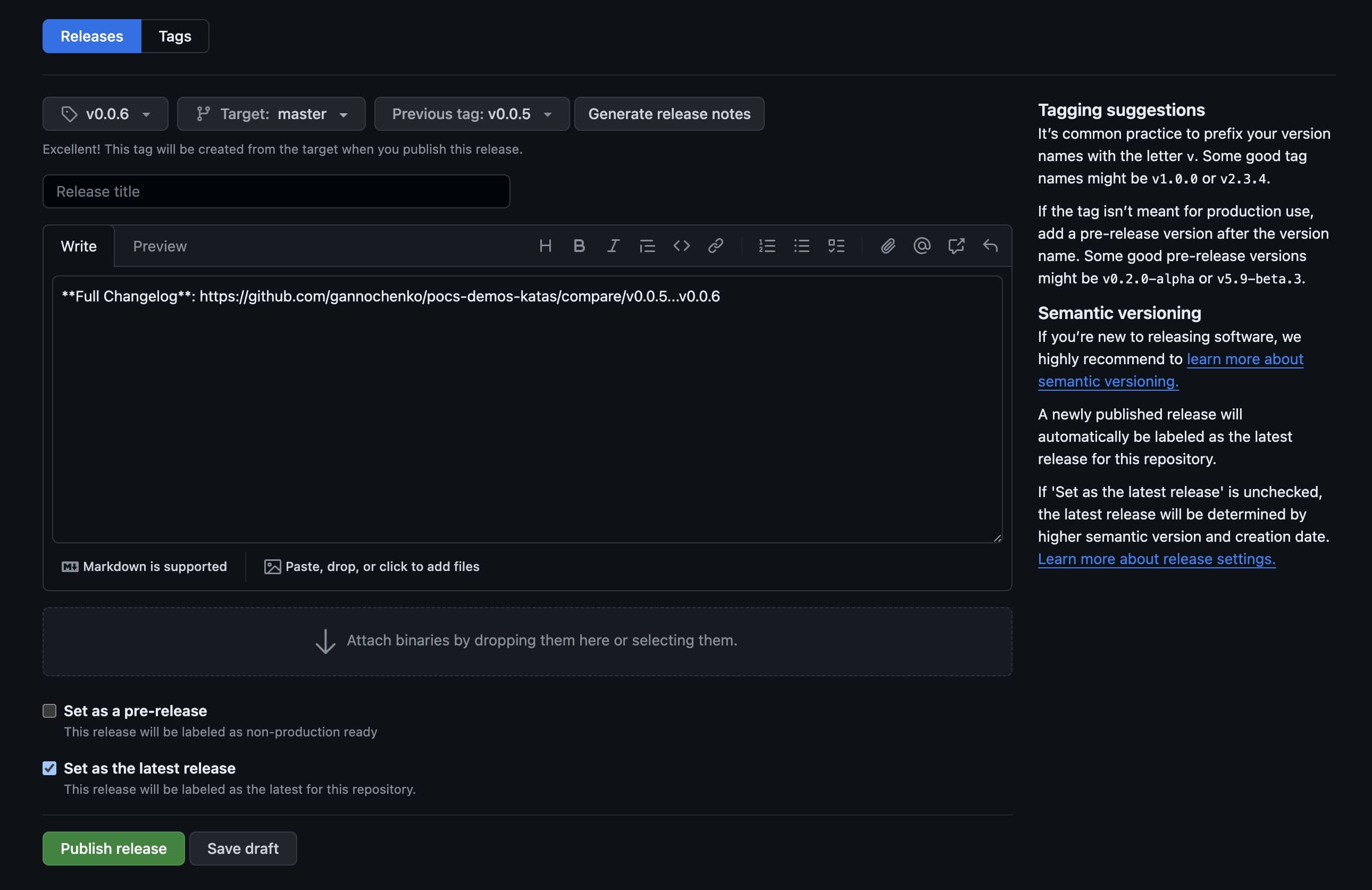

Creating a release could hardly be simpler: just go to the "releases" section of your repository and draft a new release.

There is a regex in the pipeline above that only lets tag names such as vXX.YY.ZZ through. The value of the tag also becomes the image tag.
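The tag check and the version extraction are easy to experiment with outside of the pipeline. Here is a rough sketch with two hypothetical helpers, `is_release_ref` and `version_from_ref`. Unlike the pattern in the pipeline, this one is anchored with `^` and `$`, so a ref such as refs/tags/v1.2.3-rc1 is rejected; the unanchored version would let it through because it only looks for a match somewhere in the string.

```shell
#!/usr/bin/env bash
# Sketch: validate a release ref and extract the version from it.

# is_release_ref REF -> succeeds only for refs of the exact form refs/tags/vX.Y.Z.
is_release_ref() {
  printf '%s\n' "$1" | grep -Eq '^refs/tags/v[0-9]+\.[0-9]+\.[0-9]+$'
}

# version_from_ref REF -> strips the refs/tags/ prefix, leaving e.g. v1.2.3.
version_from_ref() {
  printf '%s\n' "${1#refs/tags/}"
}

# Try a few made-up refs.
for ref in refs/tags/v1.2.3 refs/tags/v1.2 refs/tags/v1.2.3-rc1 refs/heads/master; do
  if is_release_ref "$ref"; then
    echo "$ref -> build image $(version_from_ref "$ref")"
  else
    echo "$ref -> skipped"
  fi
done
```

Whether pre-release suffixes should pass is a policy decision; if they should, the anchors can simply be dropped again.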

And that's all for today.

In the next article we will learn how to stop creating resources manually by putting Terraform to use!

The code for this part is here. Enjoy!